We all know by now that the Straits Times attempted to put ChatGPT to the sword. It recently challenged ChatGPT to the PSLE Science exam and found that the chatbot was “miserable” at it, scoring an average of 21⁄100 across the PSLE 2020 to PSLE 2022 Science papers.

The reason for its failure is simple: the current publicly available version of ChatGPT is not adept at interpreting images, diagrams and graphs. In PSLE Science, most multiple-choice and open-ended questions are based on experiments whose results are presented as images, graphs and tables. ChatGPT is unable to understand and handle such questions.

In fact, text-based questions traditionally make up around 20 to 25 of the 100 marks in the PSLE Science exam, which would explain why ChatGPT only managed an average overall score of 21⁄100 for PSLE 2020 to PSLE 2022.

With OpenAI already disclaiming that the current version of ChatGPT is unable to handle images, we did not find the Straits Times test to be a fair experiment (no pun intended). So we decided to give ChatGPT a second crack at PSLE Science. This time, we played to the chatbot’s strengths by feeding it only text-based multiple-choice questions.

By and large, with sufficient prompting, the chatbot was able to arrive at the right answers. However, we found its explanations wanting in many respects. In other words, ChatGPT is a good student but not quite a good teacher.

We submitted 11 text-based multiple-choice questions to ChatGPT, expecting accurate answers and explanations. Unfortunately, it answered 3 of the questions incorrectly. What was even more surprising and worrying was that some of the explanations it gave were misleading.

We will explain with our experimental findings.

PSLE 2022 MCQ: 7⁄11

In the PSLE 2022 paper, only 11 MCQs were text-based or could easily be converted to text.

Three questions were answered wrongly and one question, though answered correctly, was explained inaccurately. Counting all four against ChatGPT leaves 7 out of 11. The questions were 7, 8, 9 and 16. We reproduce them in the following screenshots, which show our conversations with ChatGPT:

Question 7

Correct Answer: 2

ChatGPT’s Answer: 3

To our amazement, ChatGPT fell short on a rather straightforward question. It answered wrongly on its first attempt but was able to correct itself after we pointed out its mistake.

Question 8

Correct Answer: C and D

ChatGPT’s Answer: C only

Yet again, ChatGPT disappointed us with a wrong answer. It admitted an “oversight” on its part and corrected itself after we pointed out what the right answer was. Its saving grace, however, was that it was able to provide a correct explanation for the answer given.

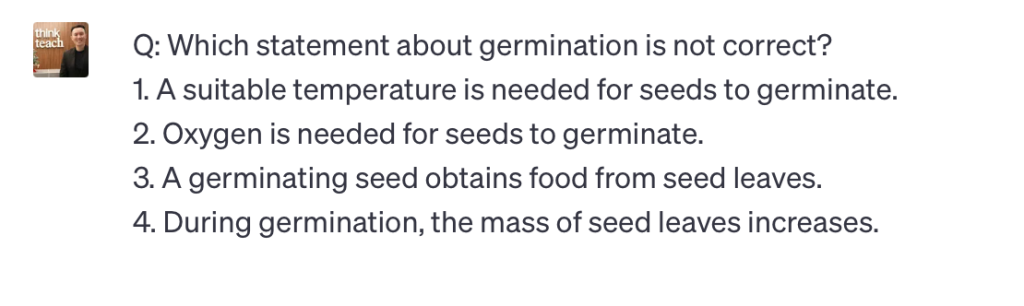

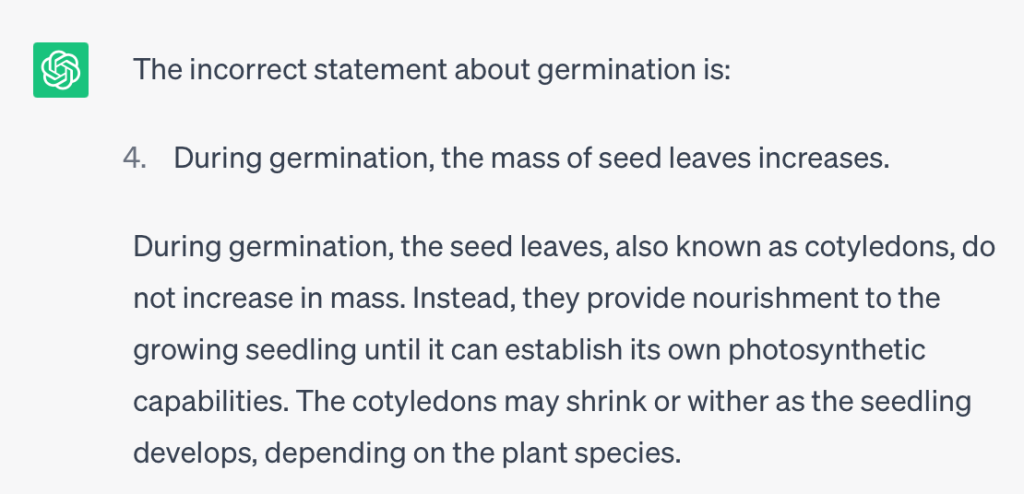

Question 9

Correct Answer: 4

ChatGPT’s Answer: 4 but Wrong Explanation

ChatGPT was able to identify that option (4) is the correct answer.

However, it fell short when asked to explain the answer to a primary school student. In Point 4, ChatGPT explained that the mass of seed leaves remains the same during germination. However, a PSLE student would have learnt that the mass of seed leaves decreases since the seedling would use up the food stored in the seed leaf. When this mistake was pointed out to it, ChatGPT was able to apologise for its error and correct itself.

Question 16

Correct Answer: 4

ChatGPT’s Answer: 3

Finally, for question 16, ChatGPT gave the wrong answer once again.

However, when its mistake was pointed out, ChatGPT was able to backpedal and answer the question correctly. But by then the damage would already have been done. A student who was not wise to the mistake would have learnt something wrong from ChatGPT.

What we were able to conclude from our little experiment is that it is essential to exercise caution when using AI-powered chatbots such as ChatGPT. Not only is it not yet advanced enough to handle complex diagrams or graphs, it is also not accurate all the time. The simple reason for this is that chatbots like ChatGPT learn from information and data available on the Internet. As we have all come to realise, not everything we see or hear on the Internet is reliable.

Concluding Remarks

So is ChatGPT even useful to students when it comes to Science? We would say yes for older students, but we would hesitate to use it with primary school students. ChatGPT’s potential usefulness is more evident for tertiary-level students, who already possess a solid foundation of Science knowledge and need to extract information from a large volume of data. We have to be cognizant that the information provided by ChatGPT is not always aligned with the specific requirements of the PSLE Science syllabus in Singapore.

ChatGPT also has much room for improvement and occasionally makes conceptual mistakes. This poses a challenge for primary students who seek accurate learning resources tailored to the PSLE Science curriculum. In addition, ChatGPT does not equip primary students with the necessary writing techniques or emphasise the keywords and key phrases that are crucial for answering the open-ended questions in the Science examination.

The other major issue with ChatGPT is that it is not a flexible teaching tool, because you must always ask it the right questions. ChatGPT does not know you and cannot read your mind. It is thus only as effective as the questions you ask it. And therein lies the problem: asking the right questions is the most difficult part. From our experience teaching many students from different schools, we understand that students by and large do not know what they do not know, and so they naturally do not know what to ask. As ChatGPT is unable to assess or diagnose students, it is powerless to provide any form of meaningful assistance on this front.

Considering some of these limitations, it becomes clear that an overreliance on ChatGPT is not advisable. Instead, a more comprehensive approach that incorporates relevant and targeted educational resources should be adopted to ensure primary students receive reliable and tailored support for their PSLE Science examination preparation.

This article was proudly written by TTA’s Science Team. We aim to help students achieve exam excellence in Science with our signature templated answering structures, which will help your child tackle even the hardest Science questions with ease and confidence.